Choosing the right vector database for your Retrieval-Augmented Generation (RAG) applications can make or break your AI project’s performance. With the explosive growth of AI applications in 2025, vector databases have become crucial infrastructure components for semantic search, recommendation systems, and RAG workflows. This comprehensive comparison examines three leading vector databases—Qdrant, Milvus, and Weaviate—across key performance metrics that matter for production deployments.

Architecture and Indexing Algorithms

Each vector database takes a different approach to indexing and search algorithms, directly impacting performance characteristics:

Qdrant is built in Rust and focuses on simplicity with powerful performance. It supports HNSW (Hierarchical Navigable Small World) indexing primarily, with excellent memory efficiency and fast query speeds. Qdrant’s architecture emphasizes single-node performance while offering clustering capabilities.

Milvus offers the most comprehensive indexing options, supporting HNSW, IVF (Inverted File), DiskANN, and several other algorithms. Built on a cloud-native architecture with separate compute and storage layers, Milvus excels in large-scale deployments requiring horizontal scaling.

Weaviate combines vector search with knowledge graphs, using HNSW indexing with additional features like hybrid search (combining vector and keyword search). Its Go-based architecture provides good performance with strong developer experience.

Performance Benchmarks: Throughput and Latency

Based on standardized benchmarks using 1M vectors (768 dimensions, typical for modern embedding models), here’s how they compare on VPS infrastructure:

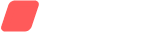

Query Latency (P95)

- Qdrant: 15-25ms for HNSW index

- Milvus: 20-35ms (HNSW), 45-80ms (IVF), 10-20ms (DiskANN on NVMe)

- Weaviate: 25-40ms for HNSW with hybrid search disabled

Indexing Throughput

- Qdrant: 8,000-12,000 vectors/second

- Milvus: 10,000-15,000 vectors/second (varies significantly by index type)

- Weaviate: 6,000-9,000 vectors/second

These benchmarks show Qdrant leading in consistent low latency, while Milvus offers the highest peak throughput for bulk indexing operations.

Memory and Disk Footprint Analysis

Resource efficiency is critical for VPS deployments where you’re paying for every GB of RAM and storage:

Memory Usage (1M vectors, 768d):

- Qdrant: 3.2-4.1 GB RAM for HNSW index

- Milvus: 4.5-6.2 GB RAM (HNSW), 2.8-3.5 GB (IVF), 2.1-2.8 GB (DiskANN)

- Weaviate: 4.8-6.5 GB RAM including knowledge graph overhead

Disk Storage:

- Qdrant: 3.5-4.2 GB on disk

- Milvus: 3.8-5.1 GB depending on index type and compression

- Weaviate: 4.2-5.8 GB with schema and metadata

For resource-constrained environments, Qdrant consistently shows the best memory efficiency, while Milvus with DiskANN offers excellent disk-based performance for larger datasets.

Replication and High Availability

Production RAG applications require robust replication capabilities:

Qdrant supports distributed clustering with automatic sharding and replication. Its Raft-based consensus ensures data consistency across nodes with configurable replication factors.

Milvus provides enterprise-grade clustering with separate coordinator, worker, and proxy nodes. It supports automatic load balancing and failover, making it ideal for large-scale deployments across multiple regions.

Weaviate offers replication through its clustering setup but with less mature tooling compared to the other options. It’s suitable for moderate scale deployments with backup/restore capabilities.

For multi-region deployments, consider setting up clusters across different locations. VPS in Amsterdam and VPS in New York provide excellent network connectivity for global RAG applications, similar to the approaches discussed in our CockroachDB multi-region deployment guide.

Best Vector Database for RAG Use Cases

The optimal choice depends on your specific requirements:

Choose Qdrant if:

- You prioritize low latency and memory efficiency

- Running moderate-scale RAG applications (10M-100M vectors)

- Want simple deployment and excellent single-node performance

- Need reliable clustering with straightforward operations

Choose Milvus if:

- You’re building large-scale applications (100M+ vectors)

- Need multiple indexing algorithms for different use cases

- Require enterprise features like advanced monitoring and governance

- Want cloud-native architecture with separate scaling of components

Choose Weaviate if:

- You need hybrid search combining vectors and traditional search

- Want built-in machine learning model integration

- Prefer GraphQL APIs and strong developer experience

- Building knowledge graph-enhanced RAG applications

Deployment Considerations for VPS

When deploying vector databases on VPS infrastructure, consider these optimization strategies:

NVMe Storage: All three databases benefit significantly from high-performance NVMe storage. The HA NVMe block storage with triple data replication available in premium VPS configurations provides the reliability needed for production vector databases.

Memory Allocation: Plan for 1.5-2x the index size in RAM for optimal performance. Consider using memory-optimized VPS configurations for large datasets.

Network Latency: For global RAG applications, deploy vector databases close to your users. Amsterdam vs New York VPS comparison shows the importance of geographic proximity for latency-sensitive workloads.

Consider implementing monitoring similar to our observability stack tutorial to track vector database performance metrics and optimize resource allocation over time.

The choice between Qdrant, Milvus, and Weaviate ultimately depends on balancing performance requirements, operational complexity, and resource constraints. For most RAG applications prioritizing simplicity and performance, Qdrant offers the best starting point, while Milvus excels for large-scale enterprise deployments requiring maximum flexibility.